Picture this scene: your CEO asks, “Are our hiring algorithms biased?” Years ago, a vague assurance might have been enough. Today, the question demands a clear, defensible answer. Consider the Chicago retailer whose résumé screener silently excluded seven ZIP codes. Executives were shocked until an auditor explained it was a proxy for college-athletics data. The solution wasn’t a simple fix; it required rewriting old sourcing logic. This is no longer a hypothetical scenario. With New York City’s Local Law 144 and the looming EU AI Act, using AI in recruitment creates direct corporate liability. This article offers a hands-on guide for Talent Acquisition Heads, Legal Counsel, and Vendor Selection Committees.

Image generated with gpt4o

We will first dissect the legal landscape. Then, we will unpack the modern AI auditing toolkit—data lineage graphs, synthetic-candidate testing, and explainability dashboards. We will also define the critical role of the fairness engineer. Finally, we’ll equip you with the questions needed to cut through vendor hype and ensure your AI tools are a competitive advantage, not a compliance nightmare.

II. The Legal Imperative: Why “Black Box” AI is Now a Liability

The shift from ethical best practices to legal mandates is undeniable. Regulators no longer tolerate opaque AI systems, especially in high-stakes areas like employment. For companies operating in major jurisdictions, understanding these new rules is not optional—it is essential to avoid significant financial and reputational damage.

2.1. NYC Local Law 144: Mandating Bias Audits

New York City has taken decisive action with Local Law 144, a regulation with sharp teeth. The law targets any “Automated Employment Decision Tool” (AEDT), a broad category covering computational processes that substantially assist or replace human judgment in hiring or promotion decisions. If your company uses an AEDT to screen candidates for jobs in New York City, the law’s requirements are explicit:

- Annual Independent Bias Audit: You must conduct an annual, independent bias audit. This audit specifically checks for disparate impact based on race/ethnicity and sex.

- Public Disclosure: The audit results are not just for internal review. They must be published clearly on your company’s website.

- Increased Scrutiny: This public disclosure is a game-changer. It opens your hiring practices to scrutiny from candidates, the press, and competitors.

- Severe Penalties: Non-compliance carries escalating penalties. Fines can reach up to $1,500 per violation, per day. For a high-volume hiring tool, this financial risk is substantial.
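
To make "disparate impact" concrete, here is a minimal sketch of the selection-rate and impact-ratio arithmetic that bias audits of this kind typically report. The group labels and counts are made up, and the 0.8 review threshold is the familiar four-fifths rule of thumb, used here only as an assumed flag rather than a pass/fail line drawn by the law.

```python
from collections import Counter

def impact_ratios(records):
    """records: iterable of (category, was_selected) pairs.
    Returns {category: (selection_rate, impact_ratio)}."""
    totals, selected = Counter(), Counter()
    for category, was_selected in records:
        totals[category] += 1
        if was_selected:
            selected[category] += 1
    rates = {c: selected[c] / totals[c] for c in totals}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any category")
    # Impact ratio: each category's rate relative to the most-selected category.
    return {c: (rate, rate / best) for c, rate in rates.items()}

# Made-up screening outcomes for two hypothetical categories.
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 24 + [("group_b", False)] * 56
)
results = impact_ratios(records)

REVIEW_THRESHOLD = 0.8  # assumption: four-fifths rule of thumb
for category, (rate, ratio) in sorted(results.items()):
    flag = "review" if ratio < REVIEW_THRESHOLD else "ok"
    print(f"{category}: selection rate {rate:.2f}, impact ratio {ratio:.2f} ({flag})")
```

A real audit would also break results out by intersectional categories and handle very small groups carefully, which this sketch does not attempt.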

2.2. The EU AI Act: Classifying AI Hiring as High-Risk

Across the Atlantic, the European Union is implementing an even broader approach with its landmark EU AI Act. This regulation classifies AI systems based on risk. Crucially, systems used for the “recruitment or selection of natural persons” are unequivocally designated as “high-risk.” This classification triggers stringent obligations for any company deploying such tools within the EU market.

Key demands of the EU AI Act for high-risk systems include:

- Robust Documentation: Comprehensive records of the AI system’s design, development, and performance.

- Clear User Instructions: Ensuring users understand how to operate the AI and its limitations.

- Human Oversight: Mechanisms for human intervention and control.

- High Accuracy and Cybersecurity: Measures to ensure the system is reliable and secure.

These core principles drive the Act: safety, transparency, and the protection of fundamental rights. For HR and talent acquisition, this means any AI vendor you partner with must provide exhaustive evidence of their system’s fairness and technical soundness. The era of accepting a vendor’s “proprietary and confidential” excuse for a lack of transparency is definitively over.

2.3. The Core Requirement: Demonstrating and Explaining Results

One common thread weaves through these regulations: the burden of proof. It is no longer enough to simply run an audit or claim your system is fair. Organizations must be prepared to demonstrate fairness and explain how their AI tools arrive at specific outcomes. This demands a deeper level of technical and procedural rigor than most companies are accustomed to. It requires a new toolkit for this new era of compliance.

Callout Box: Key Employer Obligations

Under NYC Local Law 144:

- Conduct annual independent bias audits for race/ethnicity and sex.

- Publicly post a summary of the most recent audit results on your careers page.

- Provide notice to candidates that an AEDT is being used in the assessment.

Under the EU AI Act (for High-Risk Hiring Systems):

- Establish a comprehensive risk management system.

- Ensure high-quality data governance to minimize bias.

- Maintain detailed technical documentation and logging capabilities.

- Guarantee human oversight is possible and effective.

Now that we understand the legal landscape, let’s explore the powerful tools available for auditing AI in hiring.

III. Unpacking the Auditor’s Toolkit: Three Layers of Scrutiny

To meet these new legal demands, organizations must move beyond simple statistical checks. A robust audit requires a multi-layered approach. This approach examines not just the outputs of an AI model, but its inputs and its internal logic. Think of it as a three-pronged investigation: tracing the evidence, running a sting operation, and interrogating the suspect.

3.1. Data Lineage Graphs: Tracing the Family Tree of Your Data

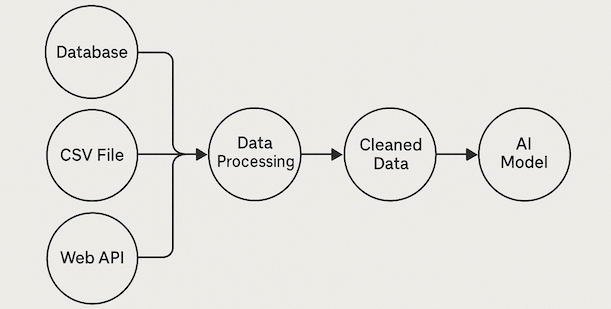

Before auditing an algorithm, you must understand the data that feeds it. Data lineage graphs provide a visual map of your data’s journey. This journey goes from its original source, through various transformations and systems, to the final point where an employment decision is influenced. Consider it the “family tree” for a piece of data. This map is foundational because bias often enters the system long before the data ever reaches the AI model. For instance, was the data sourced from a platform with skewed demographics? Was it “cleaned” in a way that inadvertently removed qualified candidates from non-traditional backgrounds?

A data lineage graph makes these pathways transparent. It shows, for example, how a zip code from an applicant form (Source) is fed into an ATS (Transformation), combined with third-party demographic data (Integration), and ultimately used as a feature in a candidate-ranking model (Decision). By tracing this path, an auditor can pinpoint the exact stage where a problematic proxy variable—like the zip codes that stood in for a protected class in the Chicago retailer’s case—was introduced. Without this map, you are merely auditing the final symptom, not the root cause.

Example of a simplified data lineage graph showing data flow from source to AI model
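
The lineage idea described above can be sketched as a small directed graph that an auditor walks backward from a model feature to its original sources. Every node name here is hypothetical; a real lineage graph would be populated from your ATS, ETL jobs, and vendor integrations.

```python
from collections import deque

# Hypothetical lineage: each node maps to the upstream nodes it is derived from.
UPSTREAM = {
    "ranking_model.feature.neighborhood_score": ["ats.enriched_profile"],
    "ats.enriched_profile": ["ats.candidate_record", "vendor.demographic_data"],
    "ats.candidate_record": ["applicant_form.zip_code", "applicant_form.resume"],
    "vendor.demographic_data": [],
    "applicant_form.zip_code": [],
    "applicant_form.resume": [],
}

def trace_upstream(node, upstream=UPSTREAM):
    """Return every ancestor of `node`, nearest first (breadth-first walk)."""
    seen, order, queue = {node}, [], deque([node])
    while queue:
        current = queue.popleft()
        for parent in upstream.get(current, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order

def original_sources(node, upstream=UPSTREAM):
    """Ancestors with no upstream of their own, i.e. where the data entered."""
    return [n for n in trace_upstream(node, upstream) if not upstream.get(n)]

feature = "ranking_model.feature.neighborhood_score"
print("Path back from the model feature:", trace_upstream(feature))
print("Original sources:", original_sources(feature))
```

Walking the graph this way shows that the hypothetical `neighborhood_score` feature ultimately depends on the applicant's ZIP code and on third-party demographic data, which is exactly the kind of proxy pathway an auditor wants surfaced.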

3.2. Synthetic-Candidate Testing: Proactively Finding Bias

While data lineage looks backward, synthetic-candidate testing looks forward. It proactively probes the AI for weaknesses. This technique involves creating many realistic but fictional candidate profiles. These “synthetic” candidates are identical in qualifications but differ systematically across protected attributes like gender, race, age, or disability status. For example, you could generate two identical résumés, one with the name “John Miller” and another with “Jamal Miller.” Or, you could change a graduation year to imply a different age.

These synthetic profiles are then run through the AI hiring tool. The power of this method lies in its controlled nature. If the AI consistently ranks “John” higher than “Jamal,” or flags the profile with a female-coded name for a different role, you have a clear, causal signal of bias. This is far more precise than trying to infer bias from noisy, real-world historical data. It allows you to stress-test the system for specific prejudices without compromising the privacy of real applicants. This creates a powerful, proactive method for discovering disparate outcomes before they impact a single real candidate.

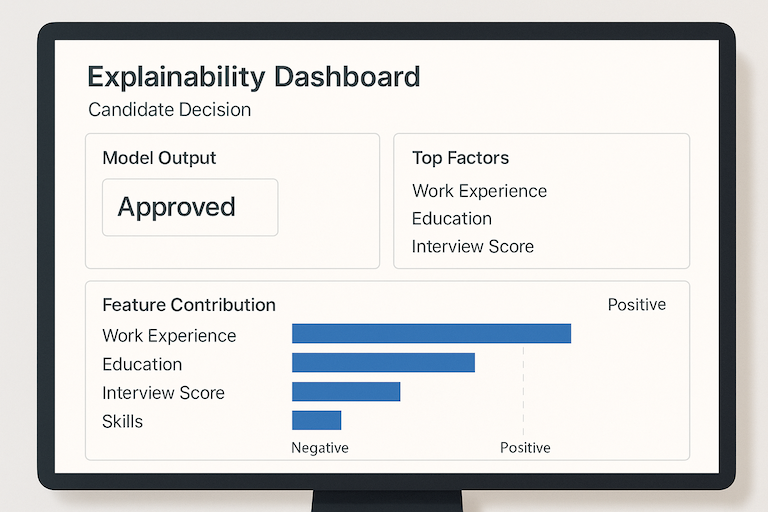
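
A minimal sketch of the paired test described above follows. The `score_candidate` function is a toy stand-in for the tool under audit, with a name bias planted deliberately so the test has something to find; in practice you would call the vendor's scoring interface instead. The profiles and names are illustrative assumptions.

```python
from statistics import mean

# Hypothetical base profiles; each is duplicated once per name variant.
BASE_PROFILES = [
    {"years_experience": 3, "skills": {"sql", "python"}},
    {"years_experience": 7, "skills": {"sql", "excel", "tableau"}},
    {"years_experience": 10, "skills": {"python", "spark"}},
]
NAME_VARIANTS = {"variant_a": "John Miller", "variant_b": "Jamal Miller"}

def score_candidate(profile):
    """Toy stand-in for the audited tool, with a deliberately planted bias."""
    score = 10 * profile["years_experience"] + 5 * len(profile["skills"])
    if profile["name"].startswith("Jamal"):
        score -= 8  # planted bias, for demonstration only
    return score

def paired_gaps(base_profiles, name_variants, scorer):
    """Score identical profiles that differ only by name; return A minus B gaps."""
    gaps = []
    for base in base_profiles:
        scores = {
            label: scorer({**base, "name": name})
            for label, name in name_variants.items()
        }
        gaps.append(scores["variant_a"] - scores["variant_b"])
    return gaps

gaps = paired_gaps(BASE_PROFILES, NAME_VARIANTS, score_candidate)
print("Per-pair score gaps:", gaps)
print("Mean gap:", mean(gaps))
```

Because each pair is identical apart from the name, any consistent nonzero gap points at the name itself. A real audit would use many more profiles and name variants and apply a statistical test before drawing conclusions.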

3.3. Explainability Dashboards (SHAP/LIME): The AI Nutritional Label

The final layer of scrutiny answers the “why” question for a single decision. If a candidate is rejected, what factors drove that outcome? This is where explainability methods like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) become essential. Think of them as a nutritional label for an AI’s decision. Instead of calories and fat, they show which inputs—like “years of experience,” “specific keyword in résumé,” or “university ranking”—contributed most heavily to the outcome.

LIME is excellent for explaining a single prediction. It provides a localized, understandable reason for an individual result. SHAP goes a step further, offering both local explanations and a global view of which features are most important across all decisions. An explainability dashboard built on these methods can show a recruiter or auditor that a candidate was flagged because they lacked a key skill, not because of a proxy for their age or gender. This ability to generate a human-readable reason for an automated decision is no longer a “nice-to-have”; it’s a core requirement for transparency and defensibility. A robust audit stack leverages all three tools—lineage, synthetic testing, and explainability—as they are not mutually exclusive, but deeply complementary.

Mock-up of a simplified explainability dashboard for a candidate decision
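
To show what sits behind such a dashboard, here is a brute-force sketch of Shapley attribution for one candidate. It is not the SHAP library's API: it enumerates every feature coalition against a single baseline candidate, which is only feasible for a handful of features and simplifies the background-data averaging that SHAP-style tools use. The scoring model, feature names, and values are all hypothetical.

```python
from itertools import combinations
from math import factorial

def model_score(features):
    """Toy scoring model (hypothetical); university_rank is deliberately unused."""
    score = 4.0 * features["years_experience"] + 15.0 * features["has_key_skill"]
    if features["has_key_skill"] and features["years_experience"] >= 5:
        score += 10.0  # interaction: experienced candidates with the key skill
    return score

def shapley_values(model, candidate, baseline):
    """Exact Shapley values: each feature's average marginal contribution
    when switching from the baseline value to the candidate's value."""
    names = list(candidate)
    n = len(names)
    values = {}
    for name in names:
        others = [f for f in names if f != name]
        total = 0.0
        for size in range(len(others) + 1):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without = {
                    f: candidate[f] if f in coalition else baseline[f]
                    for f in names
                }
                with_feature = {**without, name: candidate[name]}
                total += weight * (model(with_feature) - model(without))
        values[name] = total
    return values

candidate = {"years_experience": 6, "has_key_skill": 0, "university_rank": 120}
baseline = {"years_experience": 4, "has_key_skill": 1, "university_rank": 50}

attributions = shapley_values(model_score, candidate, baseline)
for name, value in sorted(attributions.items(), key=lambda kv: kv[1]):
    print(f"{name}: {value:+.2f}")
```

The attributions sum to the difference between the candidate's score and the baseline's score, so a reviewer can see how much of the gap each input accounts for. Here the missing key skill drives the lower score, while the unused `university_rank` receives zero.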

While these tools are powerful, technology alone is not enough. Next, let’s explore the crucial human element in AI auditing.

IV. The Human Element: Rise of the ‘Fairness Engineer’

Powerful tools require skilled operators. The complexity of AI auditing—at the intersection of data science, law, and human resources—has given rise to a new, critical role: the Fairness Engineer or AI Ethicist. This is not just a data scientist with a conscience; it is a specialist tasked with systematically embedding ethical and legal considerations into the very fabric of a company’s AI systems.

4.1. Defining the Fairness Engineer Role

The Fairness Engineer acts as a translator and a bridge. Their key responsibilities include:

- Translating Regulations: Interpreting abstract fairness principles and complex regulations (like the EU AI Act) into concrete technical requirements for data science teams.

- Legal Collaboration: Working with legal counsel to understand compliance obligations.

- HR Partnership: Collaborating with HR to grasp the nuances of the hiring process.

- Vendor Scrutiny: Partnering with vendors to scrutinize their methodologies.

- Operationalizing Fairness: Running audits using tools like SHAP and synthetic testing.

- Interpreting Results: Explaining audit results for non-technical stakeholders.

- Recommending Remediation: Proposing concrete steps when bias is detected.

4.2. Responsibilities and Skills

A successful Fairness Engineer possesses a rare hybrid of skills. They must be quantitatively adept, capable of running rigorous statistical tests and understanding machine learning models. Yet they also need strong qualitative and strategic skills. Key responsibilities include:

- Ethical Impact Assessments: Conducting assessments before an AI tool is deployed.

- Defining Fairness: Facilitating cross-functional discussions to define what “fairness” means for the organization.

- Lifecycle Integration: Ensuring ethical guardrails are integrated throughout the AI lifecycle, from design to deployment and ongoing monitoring.

4.3. In-House vs. Outsourced Expertise

For many organizations, the immediate question is whether to build this capability in-house or rely on third-party consultants. Outsourcing can provide immediate access to deep expertise. This is crucial for conducting the “independent” audits required by laws like NYC’s LL 144. However, relying solely on external parties is a strategic risk. Long-term, organizations need to cultivate internal expertise to manage the auditing process effectively, hold vendors accountable, and build a sustainable culture of responsible AI. The ideal model is often a hybrid: using external auditors for validation while building an internal fairness function to own day-to-day governance.

With the right tools and people in place, the next challenge is navigating the vendor landscape, especially with the rise of LLM-powered copilots.

V. The Vendor Minefield: Decoding Copilots and Compliance-Washing

In response to new regulations, AI vendors are flooding the market. All claim their solutions are compliant, unbiased, and transparent. The reality is a vendor minefield, where true innovation is mixed with “compliance-washing”—superficial features that provide the illusion of fairness without the underlying rigor.

5.1. The Vendor Land Grab: A Critical Perspective

Talent acquisition leaders are now inundated with pitches for AI-driven sourcing, screening, and assessment tools. The pressure to adopt these technologies for a competitive edge is immense. However, a critical perspective is essential. Many vendors are simply bolting on features to meet the letter of the law without embracing its spirit. This leaves their clients exposed.

5.2. The Risk of LLM-Based Copilots: A New Frontier for Bias

The latest and most significant challenge is the rapid integration of Large Language Model (LLM) “copilots” into existing Applicant Tracking Systems (ATS). These tools, often powered by models like GPT-4, promise to streamline workflows by generating job descriptions, summarizing résumés, or drafting candidate communications. The risk here is immense. Even if a vendor’s core matching algorithm has been meticulously audited, these new LLM layers represent a vast, unaudited surface area for bias to creep back in.

Consider this: LLMs are trained on enormous amounts of internet text, which is rife with societal biases. Without careful fine-tuning and rigorous testing, an LLM résumé summarizer might learn to downplay experience from non-prestigious universities or use subtly gendered language when describing candidates. It could penalize qualified individuals with non-traditional career paths or unconventional résumé formatting. The pattern is not new: Amazon famously scrapped an experimental résumé-screening tool after it showed bias against women, a stark reminder that models trained on skewed data reproduce that skew. LLM copilots can effectively re-encode the very biases that formal audits were designed to eliminate. This creates a dangerous backdoor for discrimination that is incredibly difficult to trace.

5.3. 10 Critical Questions to Ask Your AI Vendor

To cut through the noise and assess true vendor readiness, your selection committee needs to ask sharp, specific questions. Generic assurances are not enough:

- Can you provide the full, unredacted results of your latest independent bias audit, as required by NYC Local Law 144? (Demand to see the actual report, not a marketing summary.)

- How do you audit your new LLM-based features (like résumé summarizers or job description generators) separately from your core matching algorithms? (This is a new surface area and requires its own distinct audit.)

- What specific explainability methods (e.g., SHAP, LIME) do you provide for individual hiring decisions? Can we see a demo? (A “yes” without a demonstration is a red flag.)

- What specific type of AI technology drives your features? Is it a classification model, a generative LLM, or something else? (This helps you understand the risk profile.)

- What is your detailed process for complying with the EU AI Act’s “high-risk” system requirements? (Ask for documentation on their risk management and data governance frameworks.)

- Can we disable specific AI features for certain users, roles, or jurisdictions to manage our compliance risk? (Granular control is essential for phased rollouts and risk mitigation.)

- What training data was used for your models, and what steps did you take to mitigate bias within that data? (Look for details on data sources, balancing, and fairness-aware training.)

- How do you monitor for model drift and performance degradation over time? (A one-time audit is insufficient; continuous monitoring is key.)

- What level of human oversight and intervention is built into the workflow? (The system should assist, not completely replace, human judgment.)

- What contractual liabilities do you accept if your tool is found to be discriminatory? (This question reveals how much confidence they truly have in their product.)
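
When a vendor answers the drift question, it helps to know what a concrete check can look like. One common option is the Population Stability Index (PSI), which compares the distribution of model scores at audit time with the distribution seen later. The sketch below is a minimal version; the scores and bucket edges are made up, and the 0.1 and 0.25 cut-offs are widely used conventions rather than regulatory thresholds.

```python
from math import log

def bucket_shares(scores, edges, eps=1e-6):
    """Share of scores falling in each bucket defined by ascending `edges`."""
    counts = [0] * (len(edges) + 1)
    for score in scores:
        counts[sum(score >= edge for edge in edges)] += 1
    # Floor at eps so an empty bucket does not produce log(0).
    return [max(count / len(scores), eps) for count in counts]

def population_stability_index(baseline, current, edges):
    """PSI = sum over buckets of (current - baseline) * ln(current / baseline)."""
    base = bucket_shares(baseline, edges)
    curr = bucket_shares(current, edges)
    return sum((c - b) * log(c / b) for b, c in zip(base, curr))

# Made-up candidate scores at audit time and several months later.
audit_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.2, 0.5, 0.6, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]
EDGES = [0.33, 0.66]  # assumption: three score buckets

psi = population_stability_index(audit_scores, recent_scores, EDGES)
status = "stable" if psi < 0.1 else "watch" if psi < 0.25 else "investigate"
print(f"PSI = {psi:.3f} ({status})")
```

A drift alert does not prove the tool has become biased, but it signals that the audited behavior may no longer describe the live system and that a fresh fairness check is due.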

Now that you’re equipped to evaluate vendors, let’s look at a step-by-step action plan.

VI. Your Action Plan: A 3-Step Framework for Getting Started

Navigating this landscape can seem daunting, but a structured approach makes it manageable. Here is a practical, three-step framework to begin building a robust AI governance program for your hiring pipeline:

6.1. Step 1: Assemble Your Cross-Functional Team

AI compliance is not just an HR or legal problem; it is an organizational challenge. Your first step is to assemble a dedicated, cross-functional team. This group must include representatives from:

- Legal: To interpret regulations.

- Talent Acquisition: To map the real-world hiring process.

- IT/Data Science: To understand the technical underpinnings.

This team will be responsible for driving the initiative forward.

6.2. Step 2: Conduct an Inventory

You cannot audit what you do not know you have. The team’s first task is to conduct a comprehensive inventory of every automated or AI-assisted tool in the hiring and promotion pipeline. Map every system, from sourcing bots and résumé screeners to video interview analysis and skills assessments. For each tool, identify the vendor, the decisions it influences, and whether it qualifies as an AEDT under regulations like LL 144.
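
The inventory can start as something as simple as one structured record per tool. The sketch below assumes a few illustrative fields and a rough screening heuristic for flagging likely AEDTs; the tool names, vendors, and the heuristic itself are hypothetical, and the actual determination belongs with legal counsel.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class HiringTool:
    name: str
    vendor: str
    decisions_influenced: List[str]
    substantially_assists_decision: bool   # judgment call made with legal
    used_for_nyc_roles: bool
    last_independent_audit: Optional[str] = None  # ISO date, if any

    def likely_aedt(self) -> bool:
        """Rough screening heuristic only, not a legal determination."""
        return self.substantially_assists_decision and self.used_for_nyc_roles

# Hypothetical inventory entries.
INVENTORY = [
    HiringTool("resume_screener", "VendorA", ["interview shortlist"], True, True),
    HiringTool("video_interview_scoring", "VendorB", ["final ranking"], True, True,
               last_independent_audit="2024-03-01"),
    HiringTool("scheduling_bot", "VendorC", [], False, True),
]

def needs_audit(inventory):
    """Likely AEDTs with no independent audit on record."""
    return [
        tool.name for tool in inventory
        if tool.likely_aedt() and tool.last_independent_audit is None
    ]

print("Tools to prioritize:", needs_audit(INVENTORY))
```

Keeping the inventory in a structured form like this makes it straightforward to extend with audit dates, jurisdictions, and owners as the program matures.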

6.3. Step 3: Begin Vendor Dialogue

With your inventory in hand, it’s time to engage your vendors. Do not wait for them to send you a compliance update. Proactively reach out with the list of critical questions from the previous section. Schedule meetings to review their audit reports, see demos of their explainability features, and understand their roadmap for LLM governance. Their answers—or lack thereof—will be your single best indicator of their maturity and your potential risk.

Content Upgrade: Ready to start the conversation? Download our free, comprehensive AI Vendor Audit Checklist to take into your next meeting.

VII. Conclusion

Auditing AI in your hiring pipeline is no longer an academic exercise; it is a strategic and legal necessity. The challenge is complex, but it is not insurmountable. It requires a multi-layered approach. This approach combines sophisticated technical tools, dedicated human expertise in the form of fairness engineers, and rigorous vendor due diligence. Companies that treat this as a mere compliance checkbox will inevitably fall behind, exposing themselves to legal penalties and reputational harm.

However, organizations that embrace this challenge strategically will discover a significant competitive advantage. By building transparent, fair, and explainable AI systems, you not only mitigate risk but also build trust with candidates, improve the quality of your hires, and cement your position as a leader. This is the new pillar of corporate responsibility in the age of AI—the work must begin now. We encourage you to share this guide with your legal and HR counterparts to start this critical conversation.